Elon Musk. Stephen Hawking. Bill Gates.

Some of the richest (and best known) names in science and technology are worried about the future survival of mankind. These innovators are sounding the alarm, not about North Korea, nuclear war or even global warming, but something much more sinister: artificial intelligence.

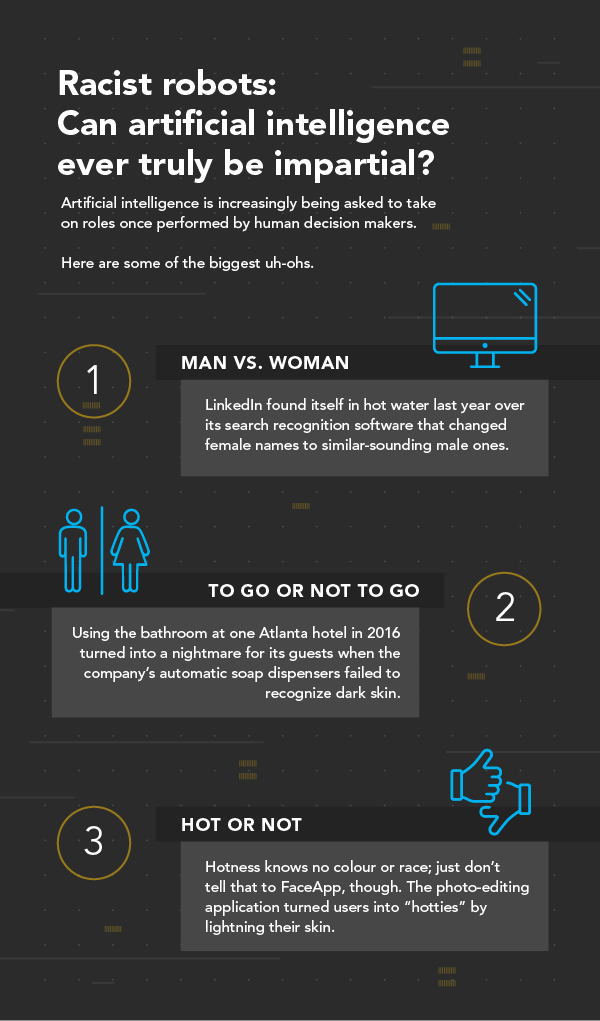

Hollywood has spent decades showcasing how dangerous artificially intelligent computers (think: Terminator, Ex Machina and more) can be. However some experts believe the bigger (and arguably more immediate) threat A.I. poses isn't from killer robots, but something far less sexy: computer-generated bias. When computers make decisions based on data skewed by humans it can topple economies and disrupt communities.

Helpful or harmful?

One of the most pivotal moments in A.I. history took place in 1996 when IBM's supercomputer, Deep Blue, beat chess champion, Garry Kasparov. For some, it signalled how far technology had come and how powerful the technology could soon become.

Since then, newspapers have produced countless stories about what an artificially intelligent future could look like. However, the reality is that A.I.is already here. In fact, machines lurk behind the millions of decisions that impact our every move, like what stories pop up in online newsfeeds and how much money banks lend its customers.

In a way, this makes the A.I. infinitely more dangerous. These algorithms shape public perception in ways that were once considered unimaginable.

"The idea of robots becoming smarter than humans and us losing our place in the totem pole is misplaced," @HumeKathryn.

What people should worry about instead is how machines are making big decisions based on little information. "What I found the greatest hurdle has to do with machine learning systems. They make inferences based on data that carries with it traces of bias in society. The algorithms are picking up on that bias and perpetuating it," explained Kathryn Hume, vice president of product and strategy for integrate.ai.

What comes next?

In theory, machines should offer up bias-free and objective decisions, but that's often not the case. Computers learn by reviewing examples fed to it and then use that information as a basis for future decisions. In layman terms, it means if you train a computer using biased information, it will end up replicating it.

One doesn't have to look too far to find examples of this phenomenon. In 2016, Pro Publica found learning software COMPAS was more likely to rate black convicts higher for future recidivism than their white counterparts. Last year Google's algorithm was likelier to show high-paying jobs to men than women, and online searches for CEOs regularly showed more white men than another other race or gender.

Breaking down bias in A.I.

Breaking down bias is possible. However, it takes work and a lot of it. Relying on more inclusive data can go a long way to fixing the problem.

"It's important that we be transparent about the training data that we are using, and are looking for hidden biases in it, otherwise we are building biased systems," said John Giannandrea, Google's chief A.I. expert, earlier this year.

Education is also a crucial part of the equation. Organizations like the Algorithmic Justice League are helping on that front. Among many things, they're educating the public about A.I. limitations and working to improve algorithmic bias.

"We in the data community need to get better at educating the public," adds Hume. "The superficial level sounds really scary and they will stymie the use of it. The tech community can help people who aren't technical community know what the stuff is and feel empowered to use it."

.webp)